Geoactive at DIGEX 2023 Conference in Oslo

This year's DIGEX conference, hosted by GeoPublishing, was held in the winter wonderland of Oslo, Norway, during the last week of March. It was an...

As we continue this journey into the "Fourth Industrial Revolution" towards a more robust and accessible Artificial Intelligence (AI) environment, Human Intelligence (HI) cannot be forgotten and must not be ignored.

We are all aware of that old adage "rubbish in, rubbish out".

The use of AI and Machine Learning (ML) covers a wide range of applications, from the very general, like data mining vast datastores, to the very specific, like identifying microfossil species from thin section photographs. However, a common theme throughout any discussion of AI is the need for accurate and robust quality control (QC) of all data fed into any AI model.

Ensuring that data is fit for its intended purpose and conforms to data quality requirements such as completeness, accuracy and validity is an essential part of any AI and ML workflow. However, it can also be a time-consuming part of any subsurface project: in some cases, up to 90% of the allocated project time! The arrival of ML and AI tools has the potential to reduce the time spent on data QC without compromising the accuracy and precision of the data.
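
As a minimal sketch of what automated checks for completeness and validity might look like, the Python below scores a single log curve. The curve mnemonics (`PHIE`, `GR`), the valid ranges and the 90% completeness threshold are illustrative assumptions, not values from any particular tool; real limits depend on the logging tool, units and geology.

```python
import math

# Illustrative (assumed) valid ranges per curve mnemonic. Real limits depend on
# the logging tool, units and geological setting.
VALID_RANGES = {
    "PHIE": (0.0, 0.5),   # effective porosity, fraction
    "GR": (0.0, 300.0),   # gamma ray, API units
}


def qc_curve(name, values, min_completeness=0.9):
    """Score one curve for completeness and validity.

    Missing samples are represented as None or NaN. The 0.9 completeness
    threshold is an assumption for illustration.
    """
    present = [v for v in values if v is not None and not math.isnan(v)]
    completeness = len(present) / len(values) if values else 0.0

    low, high = VALID_RANGES[name]
    out_of_range = [v for v in present if not low <= v <= high]
    validity = 1.0 - len(out_of_range) / len(present) if present else 0.0

    return {
        "curve": name,
        "completeness": completeness,   # fraction of samples present
        "validity": validity,           # fraction of present samples in range
        "passes": completeness >= min_completeness and not out_of_range,
    }


# Invented porosity samples: one None, one NaN and one out-of-range value.
phie = [0.12, 0.15, None, 0.18, 0.65, float("nan"), 0.21, 0.19, 0.14, 0.16]
print(qc_curve("PHIE", phie))
```

Here the porosity curve fails on both counts: two of ten samples are missing (80% complete) and one value of 0.65 lies outside the assumed 0 to 0.5 range. The function only reports the problem, leaving the fix to the interpreter.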

For any AI model to be deployed and applied to real-world situations, it needs a good data set to be trained on, one that has been ‘fact checked’, if you will. For numeric data, that means minimal errors, biases and noise. For example, you would want to reduce biases, or avoid offsets, in the porosity data you are training on that arise simply because the petrophysical software you used does not have a consistent definition of porosity. It is surprising how many products are inconsistent when you look under the hood.
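
One simple way to catch the kind of porosity offset described above is to compare the same interval as computed by two packages and look at the median difference. The sketch below assumes both curves are already sampled at the same depths; the function name, the 0.01 (one porosity unit) tolerance and the example values are hypothetical.

```python
from statistics import median


def porosity_offset(phi_a, phi_b, tolerance=0.01):
    """Estimate a systematic offset between two porosity curves.

    phi_a and phi_b are assumed to be sampled at the same depths, with None
    marking a missing sample. Returns (offset, is_biased), where offset is the
    median of phi_b - phi_a. The median keeps a few bad samples from driving
    the estimate; the 0.01 (one porosity unit) tolerance is an assumption.
    """
    diffs = [b - a for a, b in zip(phi_a, phi_b) if a is not None and b is not None]
    if not diffs:
        raise ValueError("the curves have no overlapping samples")
    offset = median(diffs)
    return offset, abs(offset) > tolerance


# Invented values for the same interval as reported by two hypothetical packages.
phi_software_a = [0.10, 0.12, 0.15, None, 0.20]
phi_software_b = [0.13, 0.15, 0.18, 0.17, 0.23]
offset, is_biased = porosity_offset(phi_software_a, phi_software_b)
print(f"median offset = {offset:.3f}, biased = {is_biased}")
```

A consistent offset of about 0.03, as in this toy case, is worth investigating (for example, whether one package reports total and the other effective porosity) before either curve goes into a training set.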

The results from the model also need to be ‘sense checked’, because the algorithm has no concept of geology or the real world. There will always be anomalous results, and recognizing whether they are just poor-quality data, an artefact of the machine, or something more significant, and then deciding what to do about them, is still the preserve of us mere mortals. Well, for now anyway.
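
Machines can at least point humans at the results worth sense checking. The sketch below uses the modified z-score, a common robust outlier rule based on the median and the median absolute deviation (MAD), to flag model predictions for review. The 3.5 threshold is a conventional choice rather than a value from this article, and the prediction values are invented.

```python
from statistics import median


def flag_for_review(predictions, threshold=3.5):
    """Return indices of predictions that look anomalous.

    Uses the modified z-score, 0.6745 * (x - median) / MAD, where MAD is the
    median absolute deviation. Flagged points are only reported, never removed:
    deciding whether each is bad data, a model artefact or real geology is
    left to a person.
    """
    med = median(predictions)
    mad = median(abs(x - med) for x in predictions)
    if mad == 0:
        return []  # no spread to measure against, so nothing is flagged this way
    return [
        i for i, x in enumerate(predictions)
        if abs(0.6745 * (x - med) / mad) > threshold
    ]


# Invented porosity predictions from a hypothetical model, one per depth sample.
predicted_phi = [0.14, 0.15, 0.16, 0.15, 0.14, 0.45, 0.16, 0.15]
print(flag_for_review(predicted_phi))
```

Only index 5 (0.45) is flagged. Whether it reflects bad input data, a model artefact or genuinely interesting geology is still a human call.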

Should you use AI first? You shouldn't. (A controversial statement, to be sure.)

But let's put it in context.

The benefits of using AI models during subsurface project interpretation and data analysis can be as simple as improving efficiency or as complex as identifying and populating models to generate final results. These AI models must be trained, and training them requires the expertise and experience of technical experts.

Applying AI technology does not require the removal of subject matter experts from a project. Quite the contrary. Utilizing AI in subsurface workflows should free up those experts to have more time to spend on the more creative and technically complex elements of a project.

You should use AI in the second place: AFTER the QC of your data is complete, any identified biases and errors have been removed, and your AI models have been trained appropriately.

The computer is a product of many smart people building on and expanding the technology and capability of a machine. But it is just a machine. Human Intelligence comes from experience, and from the understanding that not everything can (or should) be made to fit inside nice, neat boxes. This is a key understanding when it comes to the subsurface. Photograph: Megan Lorenz

The subsurface can be evaluated using remote sensing and measurements. Given the history of that data and the expertise developed over the last decades, it seems a waste to ignore all that human intelligence in favor of blind trust in a computer. Using the power of AI after HI has been integrated can expand and build upon that HI, not replace it.

Human intelligence can quickly spot where a data point appears, under certain circumstances, to be correct but in fact is not.

For the subsurface, Artificial and Human Intelligence are not an either/or choice. There are tools available, such as our very own IC, that can be used to carry out that visual QC of down-hole data quickly and easily.

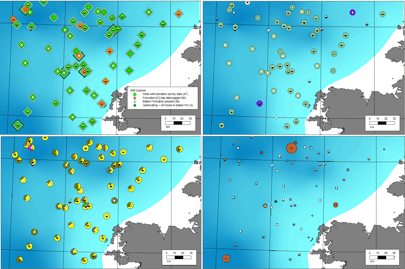

IC allows you not only to map the location of wells but also to build a series of queries that dig into your data coverage and highlight any geographical anomalies in the data. Having the power to see the bigger picture, and to view variations in the data in a different context (from well to well, or within adjacent zones that may have been mis-picked), can highlight data that requires further QC better than a simple AI working in isolation.
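
IC's own queries are not reproduced here, but the idea of spotting a possible mis-pick by comparing each well with its neighbours can be sketched in a few lines of Python. Everything in this example is an assumption for illustration: the well names, coordinates, formation-top depths, the choice of three nearest neighbours and the 50 m threshold.

```python
import math
from statistics import median

# Hypothetical wells: surface location (x, y in metres) and the depth
# (m TVDSS) picked for one formation top. All names and numbers are invented.
WELLS = {
    "W1": (0.0, 0.0, 2010.0),
    "W2": (1000.0, 0.0, 2025.0),
    "W3": (0.0, 1000.0, 2018.0),
    "W4": (1000.0, 1000.0, 2150.0),
    "W5": (500.0, 500.0, 2020.0),
}


def suspect_picks(wells, k=3, max_jump=50.0):
    """Flag wells whose top differs from the median top of their k nearest
    neighbours by more than max_jump metres."""
    flagged = []
    for name, (x, y, top) in wells.items():
        # (distance, top) for every other well, nearest first
        others = sorted(
            (math.hypot(x - ox, y - oy), other_top)
            for other, (ox, oy, other_top) in wells.items()
            if other != name
        )
        neighbour_top = median(t for _, t in others[:k])
        if abs(top - neighbour_top) > max_jump:
            flagged.append(name)
    return flagged


print(suspect_picks(WELLS))
```

Only `W4` is flagged, because its top sits about 130 m deeper than the median of its three nearest neighbours. A flag is a prompt for a closer look, not proof of an error: the difference could be a mis-pick or real structure such as a fault.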

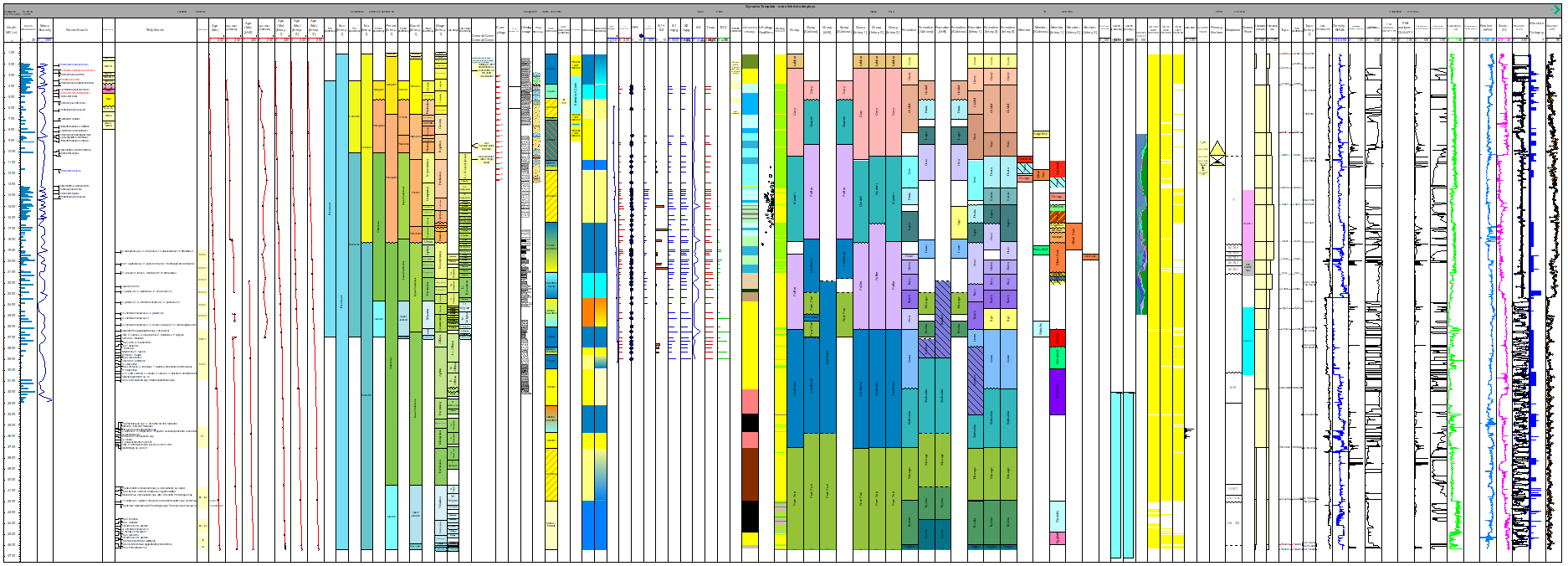

Integrating down-hole data and presenting all interpretations of all data (text, numerical data, photos and logs) in a single display at the click of a button can quickly highlight anything from a required depth shift, at the very least, to complete data busts at the most. The ability to compare all data, including historical or personal interpretations (of picks, for example), can draw attention to human bias or simply to how data coverage has evolved. This allows for more refined interpretation going forward. Together, the displays provide a tool for the team to discuss and debate, ensuring that the best data, with the highest confidence, is carried forward into the AI realm. For more on how IC can visualize your data, click HERE.
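
A required depth shift, one of the issues mentioned above, can also be estimated numerically. The sketch below is a generic cross-correlation approach, not a description of how IC works: it tries each whole-sample lag between a core measurement and a log sampled at the same depth step and keeps the lag with the highest Pearson correlation. The function names, example values and `max_lag` setting are hypothetical.

```python
def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # a flat series carries no alignment information
    return cov / (var_a * var_b) ** 0.5


def best_depth_shift(log, core, max_lag):
    """Find the whole-sample lag that best aligns core data with a log.

    Both series are assumed to share one depth step, with index increasing
    with depth. A positive lag means core[i] lines up with log[i + lag], so
    the core data would need shifting deeper by that many samples.
    """
    best_lag, best_r = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        pairs = [(log[i + lag], core[i]) for i in range(len(core))
                 if 0 <= i + lag < len(log)]
        if len(pairs) < 3:  # too little overlap to trust a correlation
            continue
        r = pearson([p[0] for p in pairs], [p[1] for p in pairs])
        if r > best_r:
            best_lag, best_r = lag, r
    return best_lag, best_r


# Invented example: core_data[i] equals log_curve[i + 2], i.e. the core is
# recorded two samples too shallow.
log_curve = [0.10, 0.11, 0.25, 0.12, 0.10, 0.30, 0.11, 0.10, 0.09, 0.10]
core_data = [0.25, 0.12, 0.10, 0.30, 0.11, 0.10]
lag, r = best_depth_shift(log_curve, core_data, max_lag=3)
print(f"best lag = {lag} samples, r = {r:.3f}")
```

With these toy values the best lag is 2 samples, with a correlation of essentially 1. Multiplying the lag by the sampling interval gives the shift in depth units; as with the other checks, the result should be reviewed alongside the displays before it is applied.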

As with all things, data integration and collaboration to build that big-picture understanding are key to trusting your data. Only then should you engage your AI toolkits to improve efficiency.

Interactive Petrophysics (IP) has a variety of toolkits available to help you to:

- Carry out data exploration and data quality control
- Clean and repair poor quality data and reduce the impact of borehole instrument issues often found in curve data
- Extrapolate and predict key reservoir properties, even with limited source data
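
Whatever methods IP's toolkits use internally are not shown here. As a much simpler illustration of the general idea of repairing and predicting a curve, the sketch below fills gaps in one curve from a correlated curve using an ordinary least-squares straight-line fit. The function names and example values are invented, and a single linear relationship is a strong assumption that real data may not satisfy.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of ys = intercept + slope * xs."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("predictor has no spread; cannot fit a line")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def fill_gaps(predictor, target):
    """Fill missing (None) target samples from a correlated predictor curve.

    The line is trained only on depths where both curves are present; measured
    target values are kept as they are. Samples where the predictor is also
    missing stay None.
    """
    pairs = [(x, y) for x, y in zip(predictor, target)
             if x is not None and y is not None]
    if len(pairs) < 2:
        raise ValueError("need at least two overlapping samples to fit a line")
    intercept, slope = fit_line([x for x, _ in pairs], [y for _, y in pairs])
    return [
        y if y is not None else (intercept + slope * x if x is not None else None)
        for x, y in zip(predictor, target)
    ]


# Invented curves that follow target = 1 + 2 * predictor, with two gaps.
predictor_curve = [1.0, 2.0, 3.0, 4.0, 5.0]
target_curve = [3.0, 5.0, None, 9.0, None]
print(fill_gaps(predictor_curve, target_curve))
```

The two missing samples are predicted as approximately 7 and 11 from the fitted line y = 1 + 2x, while the measured values are left untouched. In practice the predicted samples would still go through the same QC as the rest of the data.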

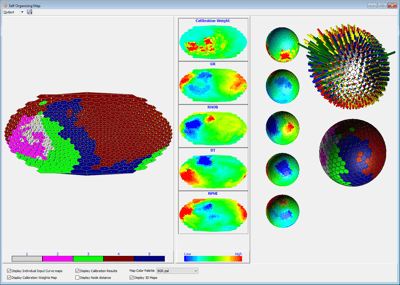

Understanding how data can be used to build confident, robust interpretations (of facies or rock types, for example) is a core element of using IP to achieve a confident, data-driven analysis. However powerful statistical methodologies may be, interpretations driven by QC'd measured data will always provide a stronger result.

Unique to IP is the Domain Transfer Analysis (DTA) module, a powerful model-based prediction method based on partial differential equations that identifies all interdependencies between the input variables and the target variable.

To read more about what IP offers with regard to Curve Prediction, Rock Typing and Domain Transfer Analysis (DTA), click HERE.

Use the Chat to Us option to get in touch with any questions.

- Geoactive Team
